(Alternative title: I aten’t dead)

My name’s Anthony and, like a lot of other people, I suffer from depression. It’s not something I like to talk about — my normal philosophy is just to filter out that part of my life and just talk and think about the interesting and fun parts of life. The downside is that when it gets particularly bad, everything gets filtered out and I more or less just vanish.

Sometimes you see depression and similar things linked to creativity, pointing at various artists or thinkers throughout history, and saying “maybe without the suffering, they would never have achieved so much”; or occasionally you get the more pessimistic view: maybe smart people are depressed because the world’s cruel and that’s just the rational response. Ignorance isn’t just bliss, but in this life it’s the only way to be happy at all.

I’m not really in a position to critique those arguments: I tend to find them both fundamentally vile, to the point that any arguments I might make against them might just as easily be illogical justifications of pre-existing bias as anything sound. Which isn’t to say I haven’t been attracted to them; being able to say “this is just how anyone creative ends up, it’s not my fault” is a lot better than “not only do I suck, but I suck even more because I can’t deal with how much I suck”, and the idea that there’s a harmless way out (that you just have to have a different lifestyle and this will all get easier) can be pleasant too.

These days at least, I tend to look at it more like a hamstring injury; it happens, medicine might help you get better, and the only relationship it has to who you are is that you might be unlucky enough to be more easily injured than some people, and the harder you push yourself, the more likely you are to have some sort of injury. The difference, for me, is that with that view it becomes a risk that you can reduce, or some damage that you can probably eventually heal from, rather than the part of your personality that allows you to be who you are.

Getting medical help isn’t easy, or at least wasn’t for me. The first time I tried was in my last year of uni. I’d stopped being able to talk to anyone, I couldn’t see the point of anything I was doing, I’d not do assignments, or do them, then just not hand them in (which was… confusing). At one time at least, I couldn’t even make it more than a few steps out the door trying to go to class out of sheer existential terror: the blue sky and sunlight were just too much for me to take. At some point it was all more than I could handle and I went to see my local doctor, who ran me through the depression questionnaire of the time, diagnosed me with mild depression, and gave me a prescription for, I think, Effexor XR, which is an SNRI anti-depressant. Possibly at the same time, or maybe a little later, I also got a prescription for diazepam to help with the panic attacks I was having (“Do you have panic attacks?” “N… wait. Does randomly falling to the floor at the top of some stairs because life terrifies you count? I guess so then…”). I looked up both the drugs on the internet when I got home, finding out all the scary stories about increased suicide risk and other side effects from the anti-depressants, and finding out that diazepam is more commonly known as valium. Finding out I was the sort of person who took valium and anti-depressants was unpleasant in and of itself.

At some point, after a few months I guess, I gave up on the anti-depressants. I’m not entirely sure why; I still had some pills left, so it wasn’t just out of fear of going back to the pharmacy or the doctor to get more. I think it was just that I hated trying to use drugs to, as I saw it, modify my personality, and hated the implied admission that I couldn’t cope on my own. I stopped pretty much cold turkey, which as I understand it is one of the worst things you can do, but oddly I don’t think I ended up having any effects from the drug at all, either taking it or not.

That, and the original comment that it was just a “mild” depression, left me not really sure if it was even depression as compared to me just somehow being even lamer than I’d thought: how can it be depression if anti-depressants have no effect? And if I’m this hopeless (dropping out of uni, not talking to anyone for months or more) with just a mild depression or none at all, that makes me pretty pathetic, because there are plenty of folks out there with more major depressions or generally worse circumstances who deal with their lives better than I was doing with mine.

Of course, even if you’re letting your life slip through your fingers, you can’t just do nothing; least of all having just been used to being busy from doing double load semesters at uni. For me, that meant distracting myself by hacking on Debian stuff — which had the benefits that it was somewhat social without having to actually talk to anyone, that it was probably a useful contribution to the world, and that it was technically difficult. Lying in bed, afraid of the sunlight, not talking to friends, on drugs that didn’t seem to do anything, with a laptop and needing some way to distract myself from how horrible I was or some way to prove I wasn’t completely useless, is the other side of how testing and ifupdown came to actually be, right at the very start.

Since then, I gradually got to the point where I could deal with people again, and while I still regret it, not finishing my second degree or honours at uni became a fait accompli, and not something I had to keep stressing myself over. Which isn’t to say there aren’t a million other things to stress myself over, and my life since then has been more or less defined by struggling with that. Anything at all useful or clever you can ever do won’t be perfect, and any particular flaw could’ve been avoided if you weren’t a horrible person. Anyone who ever says anything nice is only doing so because they’re just naturally kind and don’t really understand why their kindness is misplaced in this case, or is really only trying to manipulate you, or maybe both. On the other hand, anyone who says something mean or tells you how stupid you are, well, they might be right, but on the other hand, it’s hard to be really bothered because ultimately, they don’t know the half of it.

So I pretty much orbited there for a while, just taking a valium every now and then when I’d get too scared, and putting up with the bad times, and trying to make the good times worth the effort. Eventually that got too hard, and I went to the doctor to see about trying anti-depressants again. It was a different doctor in a different area this time, one that I was a lot more comfortable with (which is to say, not terribly comfortable), and the prescription this time was for Lovan, which, again when I looked it up at home, turned out to be an SSRI called fluoxetine, also known as prozac.

On the upside, it actually had an effect — after about a month or two of taking it I had what would probably have to be called a manic episode over about a weekend. Which sounds bad from the outside, and possibly is, but when you’re on the inside a couple of days of slightly crazy bliss and loving absolutely everything after years of just finding different reasons for being down is a really pleasant change. Fortunately, it was just a one off, and my mood settled back to having regular ups and downs, but without going anywhere near as far down, and weathering stresses with a bit more personal equanimity. It also meant that I really had been depressed, so even if I was coping badly, at least there really was something bad to cope with in the first place.

Anyway, blahblah, moving on, etc, getting my prescription renewed last year the doctor suggested that having been on prozac for a while now, I should be trying to wean myself off it, and basically give my body a chance to handle naturally what the prozac was assisting. I tried that, and it worked fairly well — over a period of some months I dropped down about a third dosage with no particular ill effects. With that having worked okay, rather than getting a renewal when that prescription ran out, I just stopped. There were a few withdrawal effects this time — some overly vivid dreams, and a weird feeling of dizziness that wasn’t quite dizziness every now and then, but that was about it.

Except that that wasn’t it for all that long. A bunch of stresses had been adding up over the past year or two, and by January I was more or less aching for some sort of a break, and not really sure I was actually capable of anything really interesting anyway. The hackfest and some of the general chatting around lca was a real boost on the latter score, but every time I found chances to just do any of the less stressful things I thought I wanted, for various reasons, I found myself not taking them. I don’t really know where things go from there; at its simplest I found myself getting frustrated and confused, quit most of my roles in Debian, went back on Lovan, stopped talking to people, stopped doing more or less anything, then wrote a blog post. I think, maybe, I know what I’m going to choose for myself next, and I’m kinda looking forward to it, but I don’t think I’m quite ready to commit to a whole bunch of future stress yet either.

Anyway, like I said, it’s something I prefer to filter out, and there’s more than a little filtered out from the above too. So I guess in conclusion, for anyone wondering what I’ve been up to lately, the answer’s “nothing much”.

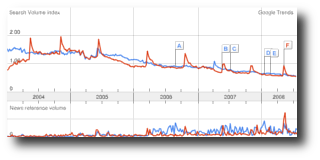

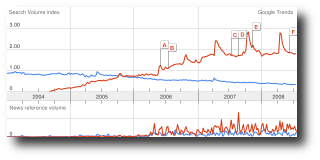

